Your AI Isn’t Slow. Your Data Is.

Slow AI pipelines aren’t caused by the model—they’re caused by the data layer beneath it. In this guide, we break down the hidden bottlenecks slowing your RAG and LLM applications: poor chunking, missing metadata, unindexed documents, slow vector retrieval, and more. You’ll learn how to redesign your data architecture for faster retrieval, lower token usage, and dramatically better AI performance. If your AI feels slow, this step-by-step blueprint will help you fix it.

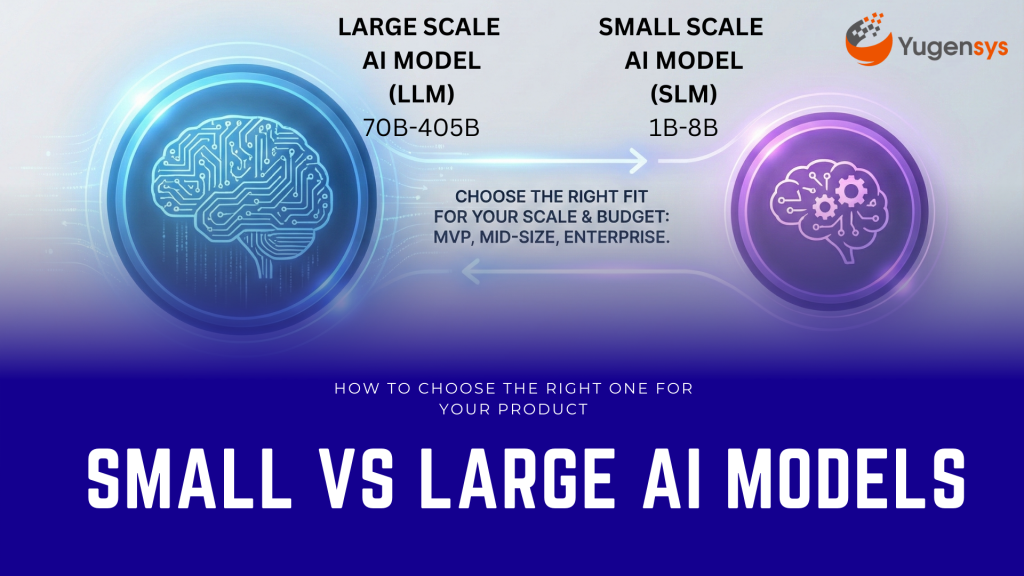

Small vs Large AI Models: How to Choose the Right One for Your Product

Choosing the right AI model isn’t about picking the biggest or most powerful LLM—it’s about choosing the one that fits your product’s scale, cost, and performance needs. This guide breaks down model selection across small, mid, and large-scale deployments, with clear recommendations for startups, growing products, and enterprise-grade systems. Learn when to use lightweight 7B models, fine-tuned 30B models, or high-end 70B+ models, and how to balance accuracy, latency, cost, and compliance to make the smartest AI architecture decisions.

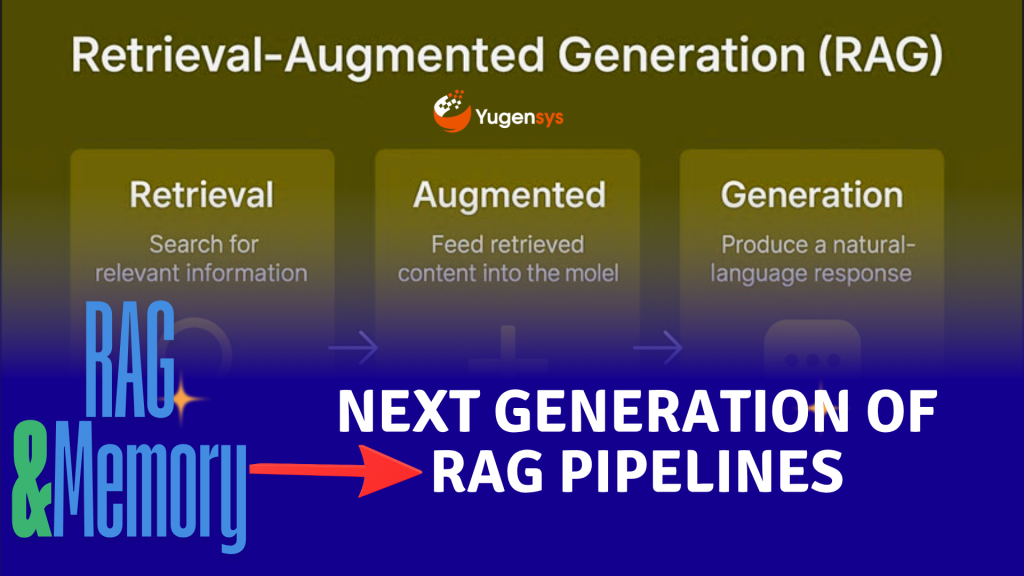

RAG → Agentic RAG → Agent Memory : Smarter Retrieval, Persistent Memory

The shift from RAG to Agentic RAG and now to Agent Memory marks a deeper architectural change in how AI systems process and evolve with information. What began as static retrieval has grown into intelligent reading and now, adaptive read–write memory. This progression moves AI from simply pulling knowledge to actively building and refining it through interaction.