How to Create Smart Agents: A Guide to AI Architectures


Agent architectures provide a structured way to design intelligent agents. A good architecture helps an agent handle complex tasks, adapt to new situations, scale up its capabilities, and integrate with other systems, making it more reliable and efficient. This structure is essential for agents that must learn from experience, respond to changing environments, and perform functions ranging from simple repetitive tasks to complex problem-solving.

Introduction

In the context of artificial intelligence (AI), there is a significant need for intelligent agents to automate and enhance various processes. These agents can perform tasks that would be time-consuming, complex, or even impossible for humans to do efficiently. For instance, intelligent agents can help in areas like healthcare by monitoring patient data and suggesting treatments, in customer service by providing instant responses and solutions, or in smart homes by managing energy usage and security systems. They are also crucial in fields such as finance for analyzing market trends and making trading decisions. By using intelligent agents, we can leverage AI to improve productivity, accuracy, and decision-making across different sectors, ultimately leading to smarter, more responsive, and adaptive systems that can better serve human needs.

In this blog post, we will explore five types of agent architectures:

- Simple Reflex Agents
- Model-Based Agents
- Goal-Based Agents
- Utility-Based Agents
- Learning Agents

We will go through each type in detail, with a diagram, a Python example, a practical use case, and its strengths and weaknesses.

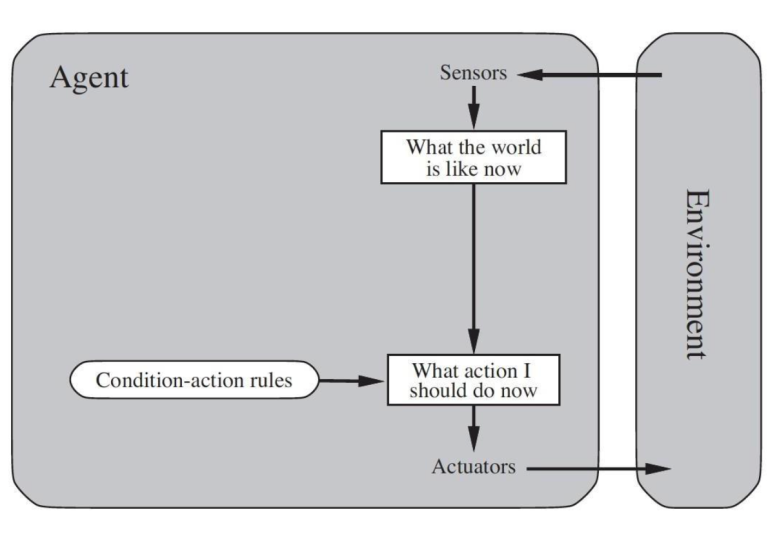

Simple Reflex Agents

Simple Reflex Agents act based on the current percept (input from the environment) without considering the history of percepts.

They operate on a set of condition-action rules, also known as production rules, which map specific percepts directly to actions. For example, a simple reflex agent designed to vacuum might have the rule “If the current percept indicates dirt, then vacuum.”

- How It Works: The agent’s decision-making process is straightforward: it observes the environment through sensors, checks the current percept against its set of rules, and executes the corresponding action.

- Strengths: Simple reflex agents are fast and efficient in well-defined, predictable environments where the necessary actions can be easily codified into rules.

- Weaknesses: These agents struggle in dynamic or partially observable environments where decisions need to consider context or history. They cannot learn from experience or adapt to new situations.
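To make the weakness concrete, here is a minimal sketch based on the two-square vacuum world often used in AI textbooks. The square names "A" and "B", the action names, and the `simulate` helper are illustrative assumptions. The agent sees only its current square and that square's status, so it has no way to know when the whole job is finished.

```python
def reflex_vacuum(percept):
    """Simple reflex agent: the action depends only on the current percept."""
    location, status = percept
    if status == "dirty":
        return "suck"
    # No memory, so it cannot tell whether the other square is already clean
    return "right" if location == "A" else "left"


def simulate(agent, world, location, steps):
    """Run the agent in a two-square world and record the actions it takes."""
    actions = []
    for _ in range(steps):
        action = agent((location, world[location]))
        actions.append(action)
        if action == "suck":
            world[location] = "clean"
        elif action == "right":
            location = "B"
        elif action == "left":
            location = "A"
    return actions


print(simulate(reflex_vacuum, {"A": "dirty", "B": "dirty"}, "A", 6))
# ['suck', 'right', 'suck', 'left', 'right', 'left']
```

After the third step both squares are clean, yet the agent keeps shuttling left and right: it has no internal state in which to record that fact.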

Practical Use Case

Automated Vacuum Cleaners

Basic automated vacuum cleaners, such as early Roomba models, are a classic example of simple reflex agents. These devices use sensors to detect obstacles and dirt and follow predefined rules to navigate and clean the floor, for instance, “If dirt detected, then vacuum; if obstacle detected, then change direction.” This model fits well because vacuuming is repetitive and predictable, requiring immediate responses rather than complex decision-making or learning.

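    class SimpleReflexAgent:
        def __init__(self, rules, default_action="move_forward"):
            # rules maps a condition function to the action it triggers
            self.rules = rules
            self.default_action = default_action

        def get_action(self, percept):
            # Check the current percept against each rule; first match wins
            for condition, action in self.rules.items():
                if condition(percept):
                    return action
            return self.default_action

    # Example usage
    def dirt_detected(percept):
        return percept == "dirt"

    def obstacle_detected(percept):
        return percept == "obstacle"

    rules = {dirt_detected: "vacuum", obstacle_detected: "turn"}
    agent = SimpleReflexAgent(rules)
    print(agent.get_action("dirt"))      # Output: vacuum
    print(agent.get_action("obstacle"))  # Output: turn
    print(agent.get_action("clear"))     # Output: move_forward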

Explanation

The Python block above defines a simple reflex agent designed to operate a vacuum cleaner.

The SimpleReflexAgent class has a set of condition-action rules stored in a dictionary. The get_action method takes a percept (input from the environment) and returns the corresponding action based on the predefined rules. If the percept is ‘dirt’, the action is ‘vacuum’; if the percept is ‘obstacle’, the action is ‘turn’. For any other percept, the default action is ‘move_forward’.

This code exemplifies how a simple reflex agent makes immediate decisions based solely on the current percept, suitable for predictable environments.

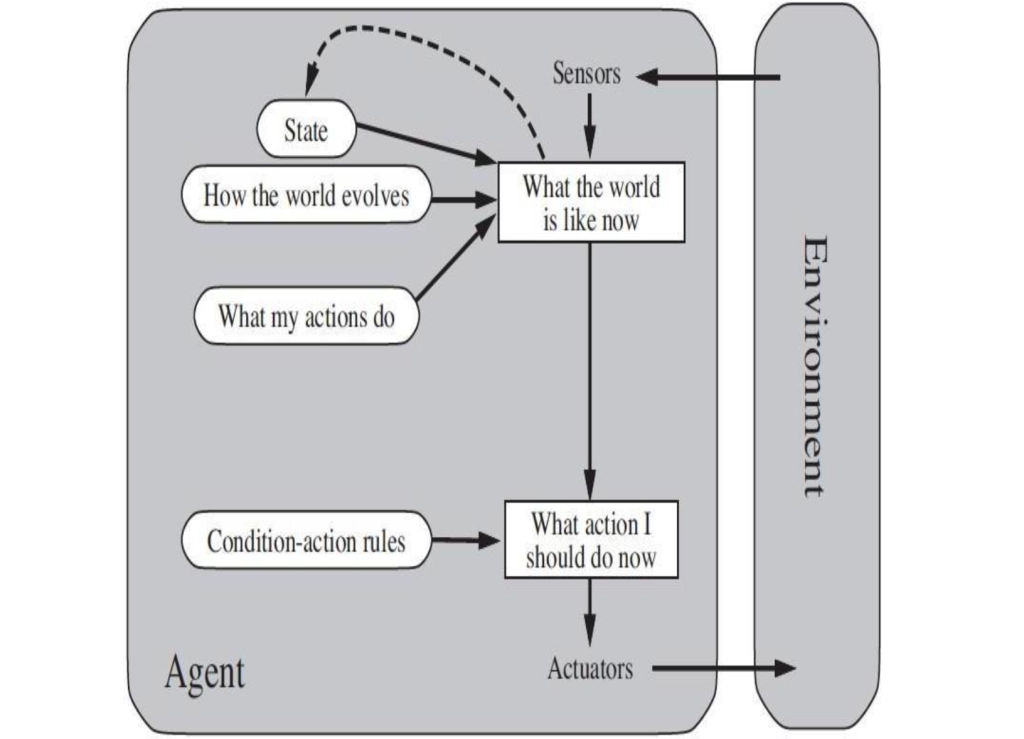

Model-Based Agents

Model-Based Agents improve upon simple reflex agents by maintaining an internal model of the world. This model helps the agent keep track of unobservable aspects of the current state, based on the history of percepts.

- How It Works: The agent updates its internal state based on the percept history and uses this state, along with the current percept, to make decisions. For instance, if the agent knows the layout of a maze, it can infer its current location even if it can’t see the entire maze.

- Strengths: Model-based agents can operate effectively in partially observable environments. They can handle more complex tasks because they maintain an understanding of the environment beyond immediate perceptions.

- Weaknesses: Developing and maintaining an accurate internal model can be challenging and computationally expensive. The agent’s effectiveness depends on the accuracy of its model, which may not always be perfect.
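In a two-square vacuum world (the square names "A" and "B", the action names, and the `simulate` helper are illustrative assumptions), a minimal model-based sketch keeps a dictionary of what it believes about each square. Because that belief persists between percepts, the agent can conclude that both squares are clean even though it only ever sees one square at a time, and then stop.

```python
class ModelBasedVacuum:
    """Model-based agent: remembers the last observed status of each square."""

    def __init__(self):
        # Internal model; None means the square has not been observed yet
        self.believed = {"A": None, "B": None}

    def __call__(self, percept):
        location, status = percept
        self.believed[location] = status  # update the model from the percept
        if status == "dirty":
            return "suck"
        if all(s == "clean" for s in self.believed.values()):
            return "noop"  # the model says there is nothing left to do
        return "right" if location == "A" else "left"


def simulate(agent, world, location, steps):
    """Run the agent in a two-square world and record the actions it takes."""
    actions = []
    for _ in range(steps):
        action = agent((location, world[location]))
        actions.append(action)
        if action == "suck":
            world[location] = "clean"
        elif action == "right":
            location = "B"
        elif action == "left":
            location = "A"
        # "noop" leaves the world unchanged
    return actions


print(simulate(ModelBasedVacuum(), {"A": "dirty", "B": "dirty"}, "A", 6))
# ['suck', 'right', 'suck', 'noop', 'noop', 'noop']
```

The stop decision is only as good as the model: if dirt reappeared in the square the agent is not looking at, its belief would be stale, which is the accuracy problem described in the weaknesses above.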

Practical Use Case

Autonomous Drones

Autonomous drones used for surveillance or delivery operate effectively as model-based agents. They maintain an internal map of their environment, updating it with new percepts from their sensors. This allows them to navigate around obstacles, adjust their flight paths, and complete their missions even when they can’t see their entire surroundings. The model-based architecture is ideal here because it allows the drone to infer unseen parts of the environment and make informed decisions in partially observable conditions.

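    class ModelBasedAgent:
        def __init__(self):
            # Internal model of the world, kept as a dictionary that
            # persists across percepts
            self.model = {"location": "unknown", "obstacle": False}

        def update(self, percept):
            # Merge the new percept into the model; anything the percept
            # does not mention keeps its previously believed value
            self.model.update(percept)

        def get_action(self):
            # Decide from the model, not just from the latest percept
            if self.model["location"] == "unknown":
                return "explore"
            if self.model["obstacle"]:
                return "navigate_around"
            return "move_forward"

    # Example usage
    agent = ModelBasedAgent()
    agent.update({"obstacle": False})
    print(agent.get_action())  # Output: explore
    agent.update({"location": (2, 3)})
    print(agent.get_action())  # Output: move_forward
    agent.update({"obstacle": True})  # location is remembered from earlier
    print(agent.get_action())  # Output: navigate_around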

Explanation

This Python block defines a model-based agent. The ModelBasedAgent class maintains an internal model of the environment, stored as a dictionary.

The update method merges each new percept into this model, and get_action decides from the model: if the agent’s location is ‘unknown’, it returns ‘explore’; if an obstacle has been detected, ‘navigate_around’; otherwise, ‘move_forward’.

This demonstrates how a model-based agent can handle more complex and partially observable environments by maintaining and using an internal representation of the world.

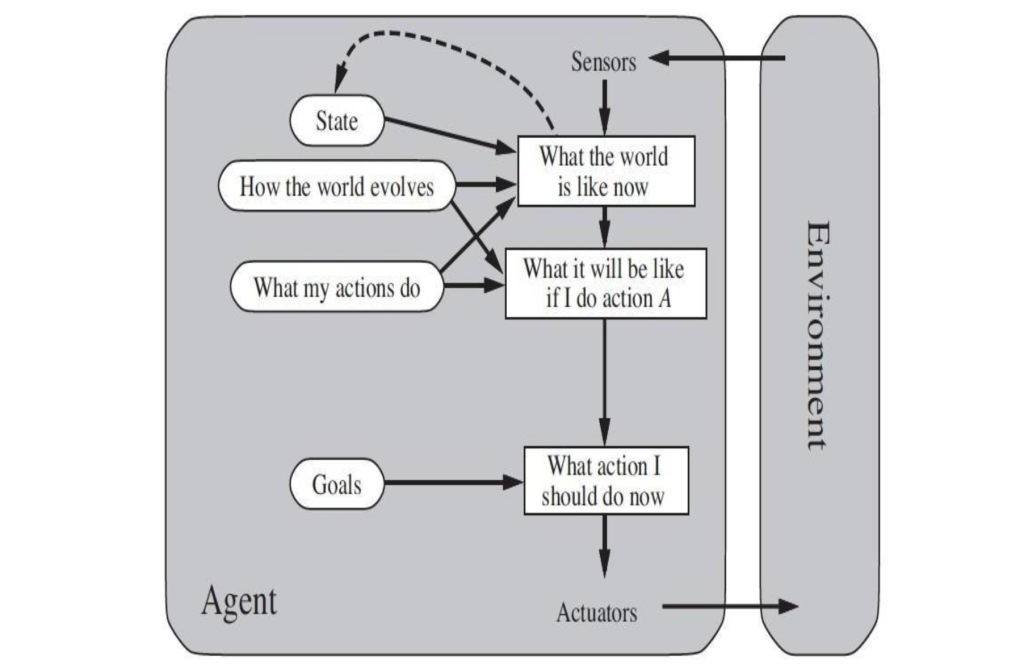

Goal-Based Agents

Goal-Based Agents use goals to drive their actions. These agents are not limited to immediate responses but can plan actions to achieve long-term objectives. Goals provide a way to prioritize actions and make decisions that are beneficial in the long run.

- How It Works: The agent uses its current state, derived from percepts and its internal model, to evaluate possible actions based on their potential to achieve its goals. It might use search and planning algorithms to find sequences of actions that lead to goal fulfillment.

- Strengths: Goal-based agents can handle complex tasks requiring strategic planning and decision-making. They are flexible and can adjust their behavior to pursue different goals.

- Weaknesses: Planning and decision-making can be computationally intensive, especially in large or complex environments. These agents require significant processing power and sophisticated algorithms to evaluate and choose actions effectively.
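The planning step can be illustrated with breadth-first search, one of the simplest search algorithms a goal-based agent might use. This sketch assumes a hypothetical grid world written as strings ("." is free, "#" is a wall) and returns the shortest sequence of moves from a start cell to a goal cell, or None if the goal cannot be reached. The `plan` function and `MOVES` table are illustrative, not from any library.

```python
from collections import deque

# Hypothetical action set: name -> (row change, column change)
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def plan(grid, start, goal):
    """Breadth-first search for the shortest action sequence from start to goal."""
    rows, cols = len(grid), len(grid[0])
    frontier = deque([start])
    came_from = {start: None}  # cell -> (previous cell, action that reached it)
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            # Walk back from the goal to recover the action sequence
            actions = []
            while came_from[cell] is not None:
                cell, action = came_from[cell]
                actions.append(action)
            return actions[::-1]
        r, c = cell
        for action, (dr, dc) in MOVES.items():
            nxt = (r + dr, c + dc)
            in_bounds = 0 <= nxt[0] < rows and 0 <= nxt[1] < cols
            if in_bounds and grid[nxt[0]][nxt[1]] == "." and nxt not in came_from:
                came_from[nxt] = (cell, action)
                frontier.append(nxt)
    return None  # goal unreachable


grid = [
    "..#",
    ".##",
    "...",
]
print(plan(grid, (0, 0), (2, 2)))  # ['down', 'down', 'right', 'right']
```

Breadth-first search finds a shortest plan when every move costs the same; when moves have different costs, an agent would more typically use uniform-cost search or A*.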

Practical Use Case

Robotic Assistants

Robotic assistants in healthcare, such as those used in elderly care or hospital settings, exemplify goal-based agents. These robots have specific goals, such as delivering medication, assisting with mobility, or monitoring vital signs. They use their understanding of the environment to plan and execute actions that achieve these goals. The goal-based architecture is suitable because it enables the robot to prioritize tasks, plan effectively, and make decisions that align with its long-term objectives of patient care and assistance.

    class GoalBasedAgent:
        def __init__(self, goals):
            self.goals = goals

        def get_action(self, state):
            # Return the result of the first goal whose condition holds
            for goal in self.goals:
                if goal.is_satisfied(state):
                    return goal.action()  # call the action function
            return None

    # Example usage
    class Goal:
        def __init__(self, condition, action):
            self.condition = condition
            self.action = action

        def is_satisfied(self, state):
            return self.condition(state)

    def condition_reach_destination(state):
        return state == "destination"

    def action_celebrate():
        return "Celebrate"

    goal = Goal(condition_reach_destination, action_celebrate)
    agent = GoalBasedAgent([goal])
    print(agent.get_action("destination"))  # Output: Celebrate

Explanation

The agent is initialized with a list of goals, each defined by a condition and an action. In this example, when the agent’s state is ‘destination’, it triggers the “Celebrate” action. This minimal version only checks whether a goal condition already holds; a fuller goal-based agent would also plan the sequence of actions that brings the goal about, so that its behavior stays aligned with its long-term objectives.

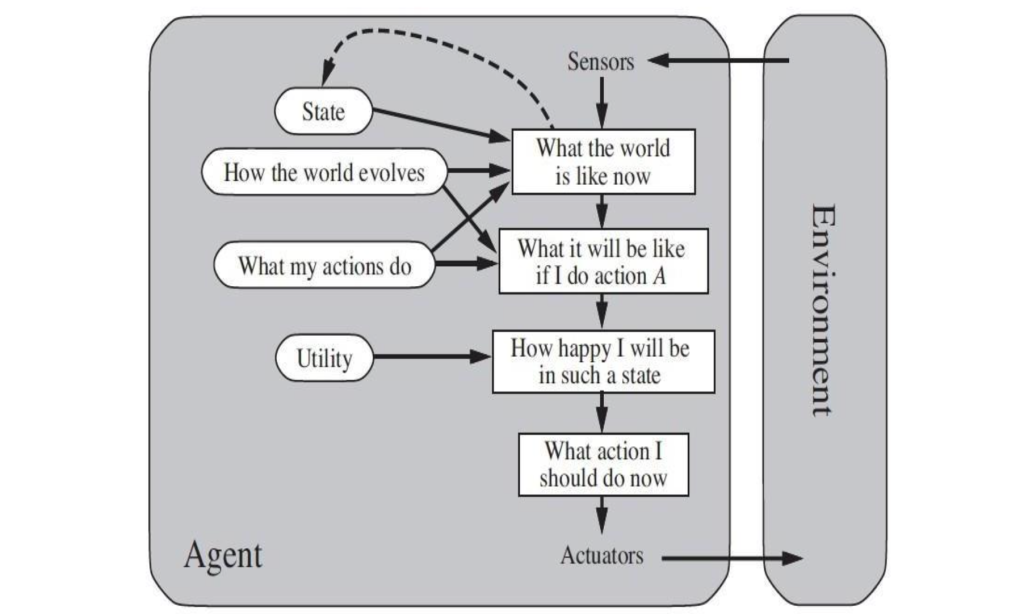

Utility-Based Agents

Utility-Based Agents extend goal-based agents by incorporating a utility function that measures the desirability of different states. This allows the agent to quantify the trade-offs between competing goals and choose actions that maximize overall utility.

- How It Works: The agent evaluates each potential state based on its utility function, which assigns a numerical value to each state. The agent then selects actions that maximize its expected utility, considering both immediate and future states.

- Strengths: Utility-based agents can make nuanced decisions that balance multiple objectives. They provide a flexible framework for decision-making, allowing agents to optimize their behavior in complex environments.

- Weaknesses: Defining an appropriate utility function can be difficult, as it must accurately reflect the agent’s preferences and trade-offs. These agents often require substantial computational resources to evaluate and maximize utility across different states and actions.
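Expected utility can be written as EU(a) = Σ P(outcome | a) × U(outcome), summed over the possible outcomes of action a. The sketch below assumes each action comes with a hypothetical list of (probability, utility) pairs; the trading-style action names and the numbers are made up for illustration.

```python
def expected_utility(outcomes):
    """Sum of probability * utility over an action's possible outcomes."""
    return sum(p * u for p, u in outcomes)


def choose_action(action_outcomes):
    """Return the action whose expected utility is highest."""
    return max(action_outcomes, key=lambda a: expected_utility(action_outcomes[a]))


# Hypothetical actions, each with (probability, utility) outcome pairs
action_outcomes = {
    "buy": [(0.6, 10.0), (0.4, -12.0)],  # big upside, big downside
    "hold": [(1.0, 0.0)],
    "sell": [(0.5, 4.0), (0.5, -1.0)],
}
print(choose_action(action_outcomes))  # sell
```

Here "buy" has the largest possible payoff, but "sell" wins on expected utility (1.5 versus about 1.2), which is the kind of trade-off a plain goal test cannot express.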

Practical Use Case

Financial Trading Systems

Financial trading systems are prime examples of utility-based agents. These systems analyze market data and execute trades according to a utility function that rewards profit and penalizes risk. They must balance multiple factors such as market trends, economic indicators, and risk tolerance. A utility-based architecture fits well because it lets the agent evaluate and compare different outcomes, aiming for the best available decision in a complex and dynamic market.

    class UtilityBasedAgent:
        def __init__(self, utility_function):
            self.utility_function = utility_function

        def get_action(self, state):
            # Pick the available action with the highest utility in this state
            actions = self.utility_function.get_actions(state)
            best_action = max(
                actions, key=lambda action: self.utility_function.evaluate(state, action)
            )
            return best_action

    # Example usage
    class UtilityFunction:
        def get_actions(self, state):
            return ["action1", "action2"]

        def evaluate(self, state, action):
            if action == "action1":
                return 10  # In a real scenario, this would be more complex
            return 5

    utility_function = UtilityFunction()
    agent = UtilityBasedAgent(utility_function)
    print(agent.get_action("state"))  # Output: action1

Explanation

The UtilityBasedAgent class is initialized with a UtilityFunction object, which provides methods to retrieve possible actions and evaluate their utility. The get_action method in UtilityBasedAgent retrieves all possible actions for the current state and then selects the one with the highest utility by comparing the values returned by the evaluate method of the UtilityFunction class.

In the example, “action1” is chosen over “action2” because it has a higher utility score, demonstrating how the agent uses utility-based decision-making to optimize outcomes. Learning Agents

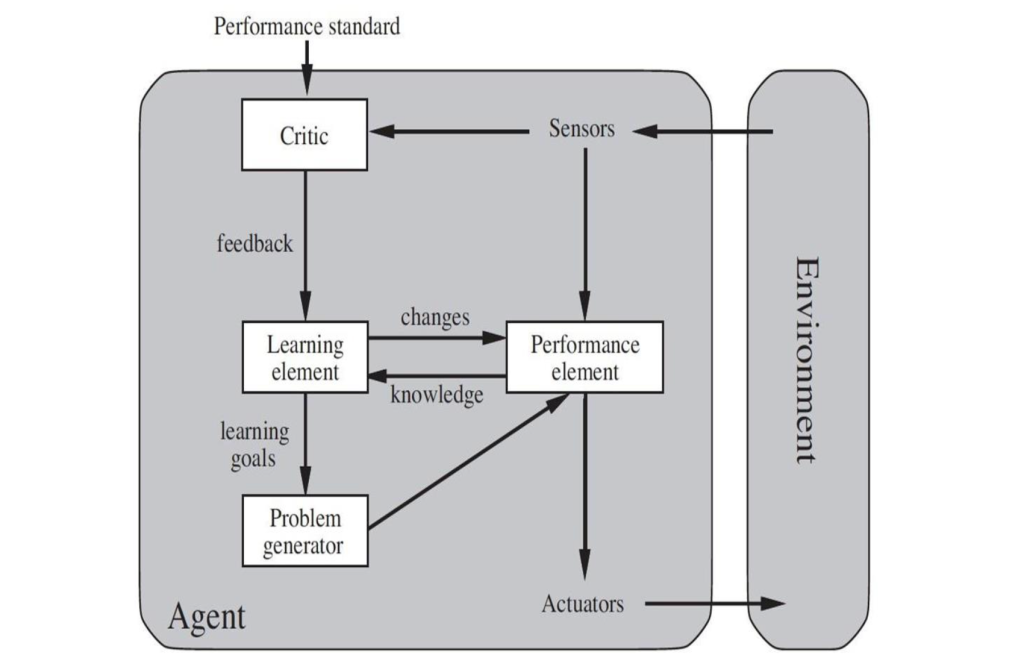

Learning Agents are designed to improve their performance over time by learning from their experiences. They have several components: a learning element that adapts based on feedback, a performance element that makes decisions, a critic that evaluates the agent’s performance, and a problem generator that suggests new experiences for learning.

- How It Works: The learning element updates the agent’s knowledge and strategies based on feedback from the critic, which assesses the outcomes of the agent’s actions. The performance element uses this updated knowledge to make better decisions. The problem generator introduces new scenarios for the agent to explore and learn from.

- Strengths: Learning agents are highly adaptable and can improve in dynamic and unknown environments. They can handle a wide range of tasks and continuously refine their behavior based on new information.

- Weaknesses: Learning can be slow and resource-intensive, requiring significant amounts of data and time. Designing effective learning algorithms and feedback mechanisms can be complex, and the agent may need to undergo extensive training to achieve high performance.
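One way to see how the four components interact is a multi-armed bandit learner with epsilon-greedy exploration. The mapping is an illustrative assumption, not a standard API: the critic is a hypothetical fixed reward function, the learning element keeps a running average reward per action, the performance element picks the action with the best estimate, and the problem generator occasionally proposes a random action so the agent keeps gathering new experience.

```python
import random


class LearningBanditAgent:
    def __init__(self, actions, epsilon=0.1, seed=0):
        self.values = {a: 0.0 for a in actions}  # estimated reward per action
        self.counts = {a: 0 for a in actions}    # how often each action was tried
        self.epsilon = epsilon
        self.rng = random.Random(seed)           # seeded for reproducible runs

    def problem_generator(self):
        """With probability epsilon, suggest a random exploratory action."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))
        return None

    def performance_element(self):
        """Exploit current knowledge: the action with the highest estimate."""
        return max(self.values, key=self.values.get)

    def act(self):
        suggestion = self.problem_generator()
        return suggestion if suggestion is not None else self.performance_element()

    def learning_element(self, action, reward):
        """Update the running average reward for the action that was taken."""
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


def critic(action):
    """Hypothetical feedback: action "b" is better than action "a"."""
    return {"a": 0.2, "b": 1.0}[action]


agent = LearningBanditAgent(["a", "b"], epsilon=0.2, seed=42)
for _ in range(200):
    action = agent.act()
    agent.learning_element(action, critic(action))

print(agent.performance_element())
```

Because the rewards here are fixed, the agent switches to preferring "b" as soon as exploration has tried it once; with noisy rewards the running averages would settle more gradually, which is one reason learning can be slow.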

Practical Use Case

Recommendation Systems

Personalized recommendation systems, like those used by Netflix or Amazon, operate effectively as learning agents. These systems learn from user behavior, preferences, and feedback to provide tailored recommendations. They continuously improve their suggestions based on new data, ensuring a better user experience. The learning agent architecture is ideal for this application because it allows the system to adapt to individual user preferences, enhancing engagement and satisfaction over time.

    class LearningAgent:
        def __init__(self, learning_algorithm):
            self.learning_algorithm = learning_algorithm
            self.knowledge_base = {}

        def learn(self, experience):
            self.learning_algorithm.update(self.knowledge_base, experience)

        def get_action(self, state):
            return self.learning_algorithm.decide(self.knowledge_base, state)

    # Example usage
    class LearningAlgorithm:
        def update(self, knowledge_base, experience):
            # Remember which action goes with this state
            knowledge_base[experience["state"]] = experience["action"]

        def decide(self, knowledge_base, state):
            # Fall back to a default action for states not seen yet
            return knowledge_base.get(state, "default_action")

    learning_algorithm = LearningAlgorithm()
    agent = LearningAgent(learning_algorithm)
    agent.learn({"state": "situation1", "action": "action1"})
    print(agent.get_action("situation1"))  # Output: action1

Explanation

This Python code defines a learning agent that improves its decision-making over time using a learning algorithm.

The LearningAgent class is initialized with a LearningAlgorithm object and maintains a knowledge_base to store learned experiences. The learn method updates this knowledge base based on new experiences provided to it. The get_action method retrieves the best action for a given state by querying the knowledge base, using the decide method of the LearningAlgorithm class.

In the example, the LearningAlgorithm class updates the knowledge base with state-action pairs and retrieves actions based on the state. After learning from an experience where “situation1” maps to “action1,” the agent correctly returns “action1” when queried with “situation1,” demonstrating its ability to make decisions based on learned data.

Conclusion

Intelligent agents are crucial for automating and enhancing a wide array of tasks. From simple reflex agents that operate based on predefined rules, to sophisticated learning agents that adapt and improve over time, each type of agent architecture serves a unique purpose. Simple reflex agents excel in predictable environments with straightforward tasks, while model-based agents handle more complex, partially observable scenarios by maintaining an internal model of the world. Goal-based agents bring strategic planning into play, aiming to achieve long-term objectives, whereas utility-based agents optimize decisions based on a utility function that balances multiple goals. Learning agents stand out for their ability to adapt and improve through experience, making them versatile and robust in dynamic environments.

These diverse agent architectures are essential for building effective AI systems that can tackle a broad spectrum of challenges. By leveraging the strengths of each architecture, we can create intelligent agents that are capable of handling everything from routine tasks to complex problem-solving, ultimately leading to more efficient, responsive, and adaptive systems. Whether in healthcare, customer service, smart homes, finance, or other fields, intelligent agents enhance productivity, accuracy, and decision-making, driving innovation and improving quality of life.

As the Tech Co-Founder at Yugensys, I’m driven by a deep belief that technology is most powerful when it creates real, measurable impact.

At Yugensys, I lead our efforts in engineering intelligence into every layer of software development — from concept to code, and from data to decision.

With a focus on AI-driven innovation, product engineering, and digital transformation, my work revolves around helping global enterprises and startups accelerate growth through technology that truly performs.

Over the years, I’ve had the privilege of building and scaling teams that don’t just develop products; they craft solutions with purpose, precision, and performance. Our mission is simple yet bold: to turn ideas into intelligent systems that shape the future.

If you’re looking to extend your engineering capabilities or explore how AI and modern software architecture can amplify your business outcomes, let’s connect. At Yugensys, we build technology that doesn’t just adapt to change; it drives it.
